We use both deep learning and metaheuristic approaches (swarm intelligence, bio-inspired computation).

Deep learning is a machine learning discipline that uses deep (multilayer) neural network algorithms.

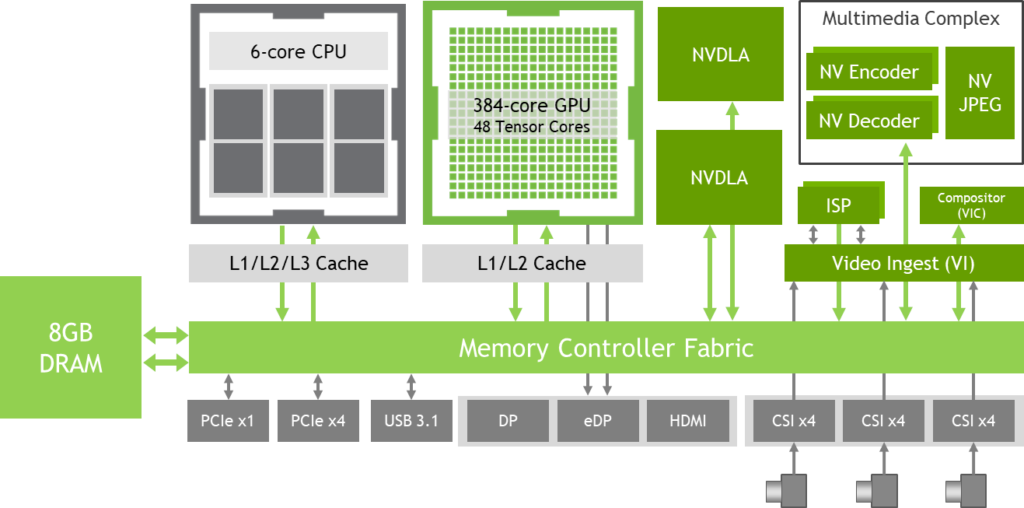

We use the NVIDIA Jetson Xavier NX module for our deep learning applications. NVIDIA describes Jetson Xavier NX as the world’s smallest, most advanced embedded AI supercomputer for autonomous robotics and edge computing devices.

It uses an NVIDIA GPU (graphics processing unit) to run neural network algorithms faster.

Delivering server-class performance in a compact 70 x 45 mm form factor, Jetson Xavier NX provides up to 21 TOPS of compute within a 15 W power budget, or up to 14 TOPS within 10 W.

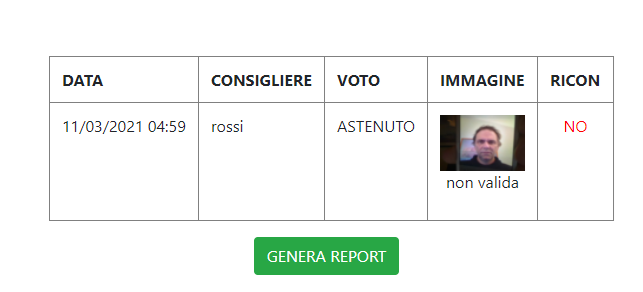

We used Jetson Xavier NX to reinforce identity verification in the EDISCO voting system, without having to implement structured illumination.

We used two classifiers: the first (ResNet-34) for facial identification and the second (ResNet-50) for the anti-spoofing function (below).

Because many client devices have no structured illumination, this system is less reliable than the identification systems commonly used on smartphones, so we adopted it as a reinforcement check only (not as a replacement for access credentials).
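As a rough illustration of how the two classifier outputs might be combined, here is a minimal sketch. It assumes each classifier yields a score between 0 and 1. The function names, the thresholds and the rule that a failed check blocks access are hypothetical and are not taken from the EDISCO implementation.

```python
from dataclasses import dataclass


@dataclass
class ReinforcementResult:
    identity_match: bool  # outcome of the facial identification classifier
    live_face: bool       # outcome of the anti-spoofing classifier

    @property
    def passed(self) -> bool:
        # Both classifiers must agree before the check counts as passed.
        return self.identity_match and self.live_face


def reinforcement_check(identity_score: float, liveness_score: float,
                        identity_threshold: float = 0.8,
                        liveness_threshold: float = 0.9) -> ReinforcementResult:
    # Thresholds are illustrative assumptions, not measured values.
    return ReinforcementResult(
        identity_match=identity_score >= identity_threshold,
        live_face=liveness_score >= liveness_threshold,
    )


def grant_access(credentials_valid: bool, check: ReinforcementResult) -> bool:
    # Credentials stay mandatory: the face check can only reinforce them,
    # never replace them (assumed policy).
    return credentials_valid and check.passed
```

With this rule, a good face match presented without valid credentials is still rejected, which reflects the "reinforcement only" role described above.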

identity reinforcement check (ok)

identity reinforcement check (not ok)

identity reinforcement check (not ok)

Jetson Xavier NX block diagram

Jetson Xavier NX development kit

SWARM INTELLIGENCE

Metaheuristic algorithms are used to deal with highly non-linear optimization problems for which no accurate model is available. In such situations, trial-and-error approaches are adopted, improved by selection mechanisms and by information sharing among multiple simple components, or "agents".

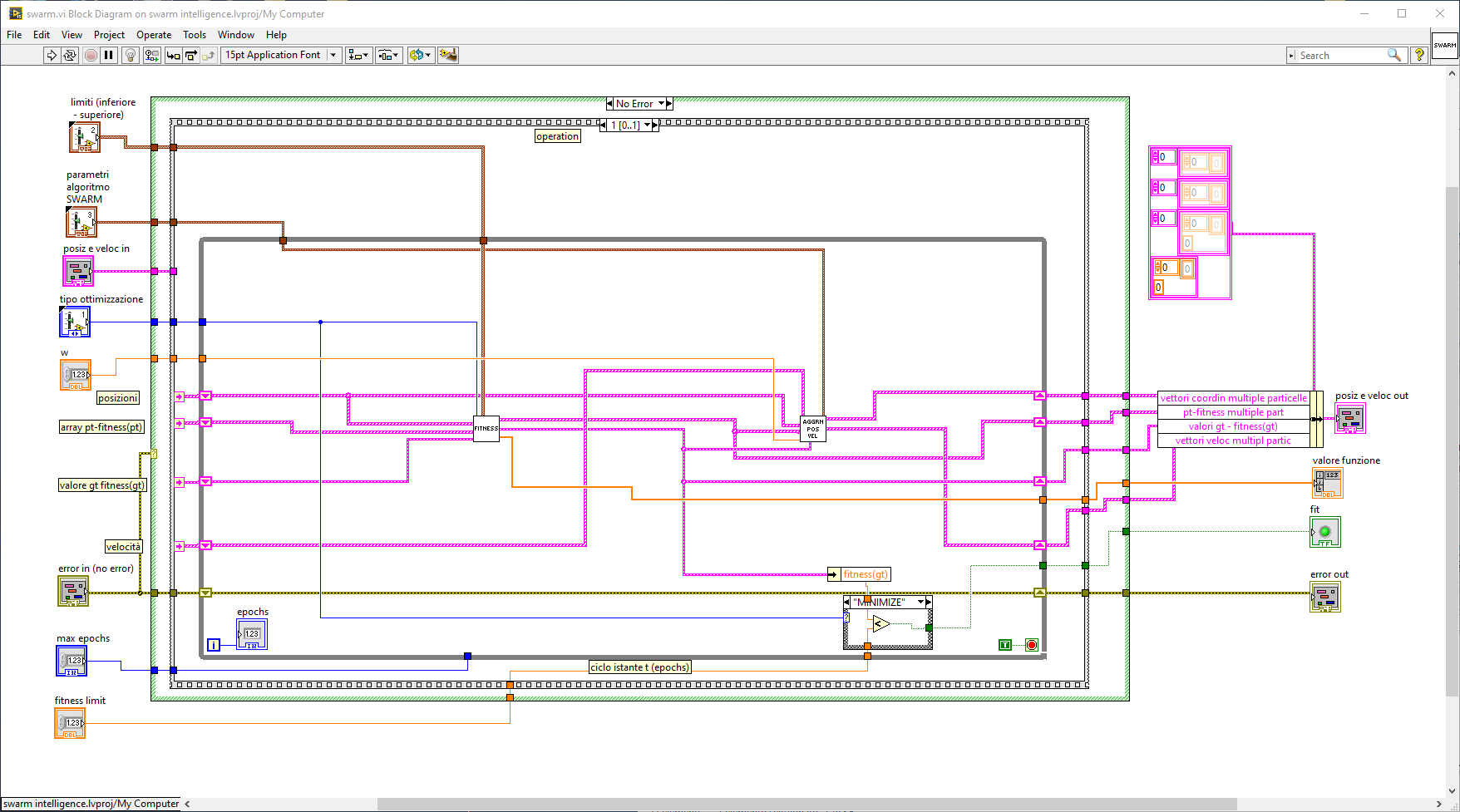

In particular, we used a bio-inspired swarm intelligence algorithm to develop a LabVIEW app for modelling dynamic, non-linear control systems.

Bio-inspired metaheuristic and swarm intelligence algorithms are rather simple to implement and can deal with optimization problems that conventional algorithms cannot solve. Their randomized, bio-inspired nature lets them escape local optima in an acceptable number of epochs.

Such algorithms can cope with a dynamic environment, whereas artificial neural networks are often trained on a static dataset and therefore do not learn new patterns when the environment changes unless they are retrained.

Metaheuristic algorithms can replace gradient methods when the objective function has sharp peaks or is not continuous. Moreover, they are not problem-specific.
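The point about non-continuous objectives can be seen on a toy example. The sketch below uses a hypothetical piecewise-constant function, `staircase`, whose slope is zero wherever it is defined, so a finite-difference gradient method never moves. A plain randomized search, used here as a stand-in for a metaheuristic and not as a swarm algorithm, still climbs to the maximum.

```python
import math
import random


def staircase(x):
    # Piecewise-constant objective: its maximum, 0, is reached for 3 <= x < 4.
    return -abs(math.floor(x) - 3)


def gradient_ascent(f, x, steps=100, rate=0.1, h=1e-6):
    for _ in range(steps):
        slope = (f(x + h) - f(x - h)) / (2 * h)  # central finite difference
        x += rate * slope  # slope is 0 on every flat step, so x never changes
    return x


def random_search(f, x, steps=2000, sigma=1.0, seed=0):
    rng = random.Random(seed)  # seeded so the run is reproducible
    for _ in range(steps):
        candidate = x + rng.gauss(0.0, sigma)
        if f(candidate) >= f(x):  # keep any move that is not worse
            x = candidate
    return x


stuck = gradient_ascent(staircase, 0.2)
found = random_search(staircase, 0.2)
print(stuck, staircase(stuck))
print(found, staircase(found))
```

Starting from 0.2, the gradient method stays where it began, while the randomized search is able to cross the flat steps because it evaluates the objective directly instead of relying on its slope.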

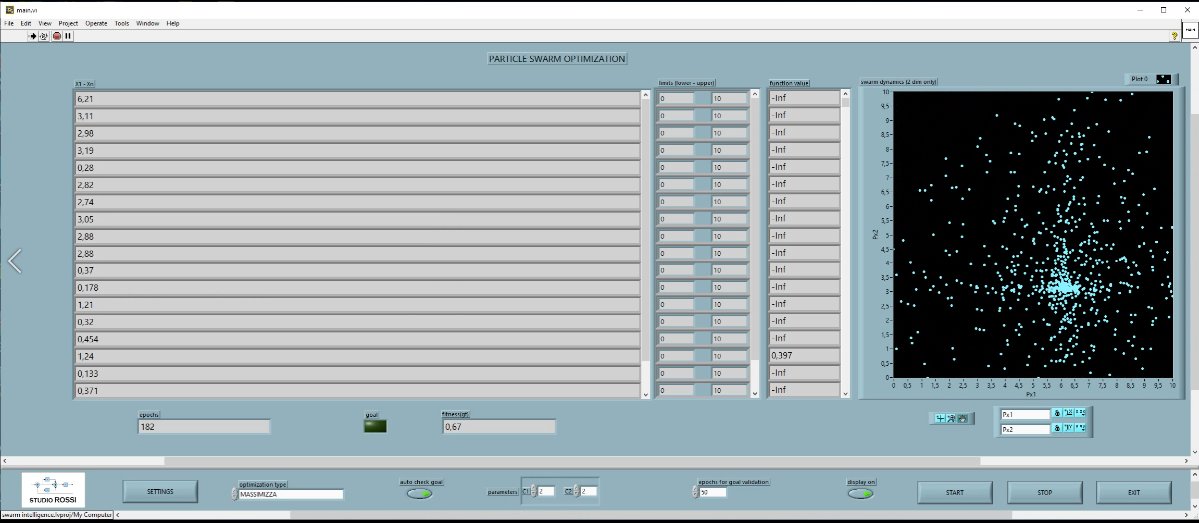

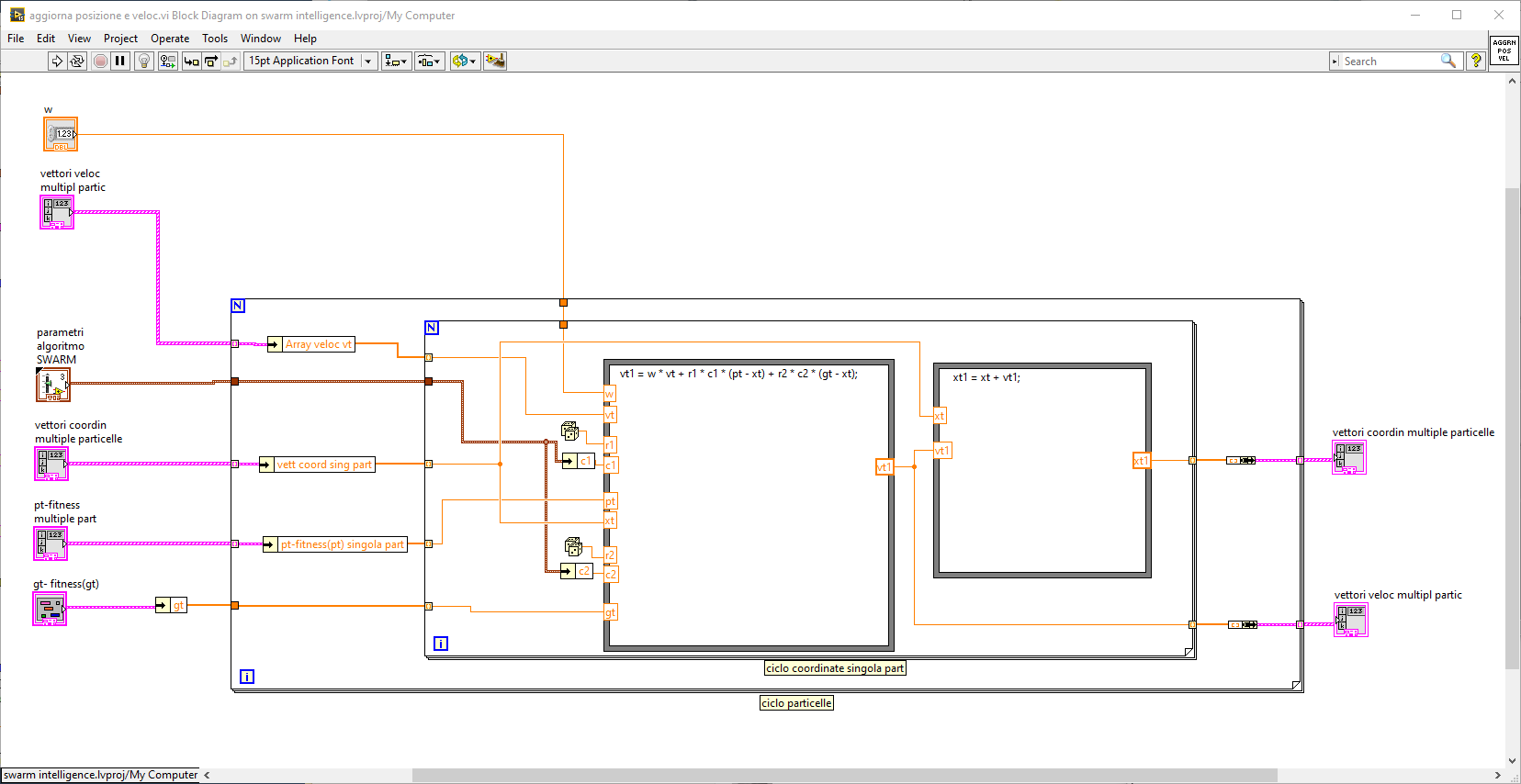

In a swarm intelligence algorithm, each particle is a candidate solution in a multidimensional space, and the goal is to bring it as close as possible to the optimum solution. Each particle is therefore evaluated by means of a fitness function and then given a velocity vector oriented toward the position of the fittest particle in the swarm.

In this manner, information is passed from the best particles to the others in each epoch. A random weighting is also added, in order to provide the needed diversity and the ability to escape any local optimum.
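The update described above can be sketched for a single particle as follows. This is a minimal illustration, not the routine used in our app: the `inertia` and `attraction` coefficients and the function name are assumptions, and only the attraction toward the fittest particle with a random weight comes from the description.

```python
import random


def update_particle(position, velocity, best_position, rng,
                    inertia=0.7, attraction=1.5):
    """Return the new (position, velocity) of one particle after one epoch."""
    new_position, new_velocity = [], []
    for x, v, g in zip(position, velocity, best_position):
        r = rng.random()  # random weight in [0, 1): source of diversity
        # Keep part of the old velocity and pull toward the fittest particle.
        v_next = inertia * v + attraction * r * (g - x)
        new_velocity.append(v_next)
        new_position.append(x + v_next)
    return new_position, new_velocity


rng = random.Random(42)
pos, vel = update_particle([0.0, 0.0], [0.0, 0.0], [1.0, -1.0], rng)
print(pos, vel)
```

In the usage lines, a particle at rest at the origin moves along each axis toward the fittest particle at (1, -1), by a different random amount per coordinate.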

In the video below, a 20-variable function is maximized, so each particle is a vector in a 20-dimensional state space. The algorithm starts with the swarm almost evenly distributed in the state space; soon, sharing the fittest particle's information, combined with random weighting, leads the majority of particles to aggregate and converge on the optimum.

The routine stops when the obtained result varies very little over a certain number of epochs (obviously, only two dimensions are shown in the plot).
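The whole routine, including the stopping rule, might look like the sketch below. Everything specific in it is an assumption made for illustration: the 20-variable objective `fitness`, the swarm size, the search box, the coefficients 0.7 and 1.5, and the `tolerance` and `patience` values that define "varies very little" and "a certain number of epochs".

```python
import random


def fitness(x):
    # Hypothetical 20-variable objective: maximum 0 at x = (1, ..., 1).
    return -sum((xi - 1.0) ** 2 for xi in x)


def maximize(fitness, dims=20, particles=30, max_epochs=1000,
             tolerance=1e-9, patience=25, seed=0):
    rng = random.Random(seed)
    # Start with the swarm spread roughly evenly over the search box.
    positions = [[rng.uniform(-5.0, 5.0) for _ in range(dims)]
                 for _ in range(particles)]
    velocities = [[0.0] * dims for _ in range(particles)]
    best = max(positions, key=fitness)[:]
    best_value = fitness(best)
    stalled = 0  # consecutive epochs without a meaningful improvement
    for epoch in range(1, max_epochs + 1):
        for p in range(particles):
            for d in range(dims):
                r = rng.random()  # random weighting for diversity
                velocities[p][d] = (0.7 * velocities[p][d]
                                    + 1.5 * r * (best[d] - positions[p][d]))
                positions[p][d] += velocities[p][d]
        candidate = max(positions, key=fitness)
        improvement = fitness(candidate) - best_value
        if improvement > 0:
            best, best_value = candidate[:], fitness(candidate)
        stalled = stalled + 1 if improvement <= tolerance else 0
        if stalled >= patience:
            break  # the result has barely changed for `patience` epochs
    return best, best_value, epoch


best, value, epochs = maximize(fitness)
print(f"best value {value:.6f} after {epochs} epochs")
```

Raising `patience` or lowering `tolerance` makes the routine run longer before it declares convergence; with only the fittest particle as an attractor, the swarm can also settle early, which is why the random weighting matters.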